Because Cloud SQL is fully managed, it automatically backs up, replicates, and encrypts your data. It also applies patches when needed and manages capacity increases and decreases. Oh, and according to Google, Cloud SQL is more than 99.95% available from anywhere in the world. The fact that Cloud SQL is fully managed only enhances its…

This video shows how to create a MySQL instance in Google Cloud Platform (GCP) and then connect to it using DBeaver. DBeaver can be downloaded for free from https… DBeaver is a universal database management tool for anyone who needs to work with data professionally. With DBeaver you can manipulate your data as you would in a regular spreadsheet, create analytical reports based on records from different data sources, and export information in an appropriate format.

Visualize and optimize slow queries from Google Cloud SQL

The next and final step is to install EverSQL's Chrome extension; no configuration is needed. Once you're done, you can navigate to the Logging tab in the Google Cloud logs dashboard and start scrolling through the logs, or navigate to a specific point in time where you suspect a…

Google Cloud Dataflow

Last Updated: 2020-May-26

Key features

Flexible resource scheduling pricing for batch processing

For processing with flexibility in job scheduling time, such as overnight jobs, flexible resource scheduling (FlexRS) offers a lower price for batch processing. These flexible jobs are placed into a queue with a guarantee that they will be retrieved for execution within a six-hour window.
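As a minimal sketch, assuming a batch pipeline written with the Apache Beam Python SDK, FlexRS is requested through the `--flexrs_goal=COST_OPTIMIZED` pipeline option. The helper names below are hypothetical and the project, region, and bucket values are placeholders; the second helper simply adds the six-hour window to a submission time to show the latest expected start.

```python
from datetime import datetime, timedelta, timezone

# Per the text above, queued FlexRS jobs are retrieved for execution
# within a six-hour window.
FLEXRS_WINDOW = timedelta(hours=6)


def flexrs_pipeline_args(project: str, region: str, temp_location: str) -> list:
    """Build Beam Python pipeline options for a FlexRS batch job (hypothetical helper)."""
    return [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        f"--temp_location={temp_location}",
        # COST_OPTIMIZED selects FlexRS's discounted, delay-tolerant scheduling.
        "--flexrs_goal=COST_OPTIMIZED",
    ]


def latest_start(submitted_at: datetime) -> datetime:
    """Latest time a FlexRS job submitted at `submitted_at` should begin executing."""
    return submitted_at + FLEXRS_WINDOW


if __name__ == "__main__":
    print(flexrs_pipeline_args("my-project", "us-central1", "gs://my-bucket/tmp"))
    submitted = datetime(2020, 5, 26, 22, 0, tzinfo=timezone.utc)
    print(latest_start(submitted).isoformat())  # 2020-05-27T04:00:00+00:00
```

These arguments would typically be passed to `PipelineOptions` when the pipeline is constructed. FlexRS targets batch jobs, so it is not a fit for streaming pipelines.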

In this walkthrough, you learn:

- How to submit a SQL statement as a Dataflow job in the Dataflow SQL UI.

- How to navigate to the Dataflow pipeline.

- How to explore the Dataflow graph created by the SQL statement.

- How to explore the monitoring information provided by the graph.

Before you begin, you need:

- A Google Cloud Platform project with billing enabled.

- The Google Cloud Dataflow and Google Cloud Pub/Sub APIs enabled.

Ensure that the Dataflow API and the Cloud Pub/Sub API are enabled. You can verify this on the APIs & Services page.
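The same check can be scripted. This minimal sketch assumes you capture the output of `gcloud services list --enabled --format="value(config.name)"`, which prints one enabled service name per line, and reports which required services are missing. `dataflow.googleapis.com` and `pubsub.googleapis.com` are the service names for the Dataflow and Pub/Sub APIs; the helper names are hypothetical.

```python
# Service names for the Dataflow API and the Cloud Pub/Sub API.
REQUIRED_SERVICES = ("dataflow.googleapis.com", "pubsub.googleapis.com")


def missing_services(enabled_output: str, required=REQUIRED_SERVICES) -> list:
    """Return the required services absent from one-name-per-line gcloud output."""
    enabled = {line.strip() for line in enabled_output.splitlines() if line.strip()}
    return [name for name in required if name not in enabled]


def enable_command(services: list) -> list:
    """Build the gcloud command that enables the given services."""
    return ["gcloud", "services", "enable", *services]


if __name__ == "__main__":
    sample = "bigquery.googleapis.com\ndataflow.googleapis.com\n"
    missing = missing_services(sample)
    print(missing)  # ['pubsub.googleapis.com']
    if missing:
        print(" ".join(enable_command(missing)))
```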

The Dataflow SQL UI is a BigQuery web UI setting for creating Dataflow SQL jobs. You can access the Dataflow SQL UI from the BigQuery web UI.

- Go to the BigQuery web UI.

- Switch to the Cloud Dataflow engine:

  - Click the More drop-down menu and select Query settings.

  - In the Query settings menu, select Dataflow engine.

  - If a prompt appears because the Dataflow and Data Catalog APIs are not enabled, click Enable APIs.

  - Click Save.

Note: The pricing for the Cloud Dataflow engine is different from the pricing for the BigQuery engine. For details, see Pricing.

You can also access the Dataflow SQL UI from the Dataflow monitoring interface.

- Go to the Dataflow monitoring interface.

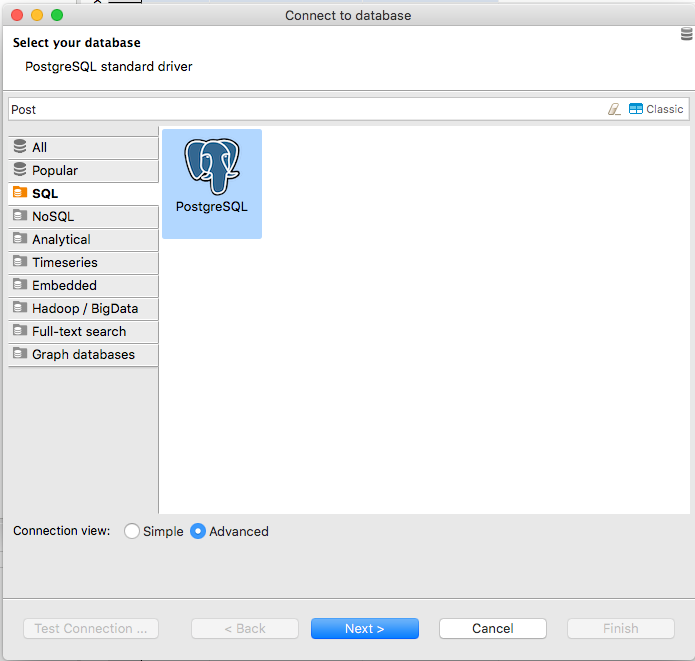

- Click Create job from SQL.

Running Dataflow SQL queries

When you run a Dataflow SQL query, Dataflow turns the query into an Apache Beam pipeline and executes the pipeline.
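To make the query-to-pipeline idea concrete, here is a plain-Python model (no Beam required) of what a windowed aggregation computes. The SQL string follows Dataflow SQL's Pub/Sub source and `TUMBLE` syntax as we understand it, but the project, topic, and column names are hypothetical. The Python stages are a simplified stand-in for the read, window, group, and combine steps of a Beam pipeline, not the pipeline Dataflow actually generates.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# Illustrative Dataflow SQL query; project, topic, and columns are hypothetical.
QUERY = """
SELECT tr.state, TUMBLE_START("INTERVAL 15 SECOND") AS period_start,
       SUM(tr.amount) AS amount
FROM pubsub.topic.`my-project`.`transactions` AS tr
GROUP BY tr.state, TUMBLE(tr.event_timestamp, "INTERVAL 15 SECOND")
"""

WINDOW = timedelta(seconds=15)
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def window_start(ts: datetime) -> datetime:
    """Map a timestamp to the start of its 15-second tumbling window (epoch-aligned)."""
    return ts - (ts - EPOCH) % WINDOW


def run_pipeline(events):
    """Simplified stages: read each event, assign a window, group by key, and SUM."""
    totals = defaultdict(float)
    for event in events:  # read
        key = (event["state"], window_start(event["event_timestamp"]))  # window + key
        totals[key] += event["amount"]  # combine
    return dict(totals)


if __name__ == "__main__":
    t0 = datetime(2020, 5, 26, 12, 0, 0, tzinfo=timezone.utc)
    events = [
        {"state": "CA", "amount": 10.0, "event_timestamp": t0 + timedelta(seconds=3)},
        {"state": "CA", "amount": 5.0, "event_timestamp": t0 + timedelta(seconds=14)},
        {"state": "CA", "amount": 7.0, "event_timestamp": t0 + timedelta(seconds=16)},
        {"state": "NY", "amount": 2.0, "event_timestamp": t0 + timedelta(seconds=5)},
    ]
    for (state, start), amount in sorted(run_pipeline(events).items()):
        print(state, start.time(), amount)
```

The two CA events at 3 and 14 seconds fall in the same window and are summed, while the one at 16 seconds starts a new window; a streaming engine does the same bucketing continuously as messages arrive.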

You can run a Dataflow SQL query using the Cloud Console or the gcloud command-line tool.
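For the gcloud route, the query is passed to `gcloud dataflow sql query`. The sketch below only assembles the argument list and does not run anything. It assumes the `--job-name`, `--region`, `--bigquery-dataset`, and `--bigquery-table` flags, which we believe match the gcloud reference but which you should confirm with `gcloud dataflow sql query --help`; the job, dataset, and table names are placeholders.

```python
import shlex


def dataflow_sql_query_cmd(query: str, job_name: str, region: str,
                           dataset: str, table: str) -> list:
    """Build a `gcloud dataflow sql query` invocation that writes to a BigQuery table."""
    return [
        "gcloud", "dataflow", "sql", "query", query,
        f"--job-name={job_name}",
        f"--region={region}",
        # Destination for the query results.
        f"--bigquery-dataset={dataset}",
        f"--bigquery-table={table}",
    ]


if __name__ == "__main__":
    cmd = dataflow_sql_query_cmd(
        "SELECT * FROM pubsub.topic.`my-project`.`transactions`",
        job_name="dfsql-demo", region="us-central1",
        dataset="my_dataset", table="results",
    )
    # shlex.join quotes the SQL so the printed line can be pasted into a shell.
    print(shlex.join(cmd))
```

To launch the job from a script instead of printing it, the same list could be passed to `subprocess.run(cmd, check=True)`.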

To run a Dataflow SQL query in the Cloud Console, use the Dataflow SQL UI:

- Go to the Dataflow SQL UI.

- Enter the Dataflow SQL query into the query editor.

- Click Create.

Note: Starting a Dataflow SQL job might take several minutes. You cannot update a Dataflow SQL job after creating it.
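Because startup can take several minutes, scripts that launch jobs usually poll the job state. This minimal sketch takes the state lookup as a callable (for example, a hypothetical wrapper around `gcloud dataflow jobs describe JOB_ID --region=REGION --format="value(currentState)"`) and waits until the job leaves its starting states. The state names follow Dataflow's `JOB_STATE_*` values; the timeout and polling interval are arbitrary choices.

```python
import time
from typing import Callable

# Dataflow job states treated here as "not started yet".
STARTING_STATES = {"JOB_STATE_UNKNOWN", "JOB_STATE_PENDING", "JOB_STATE_QUEUED"}


def wait_until_started(get_state: Callable[[], str], timeout_s: float = 900.0,
                       poll_s: float = 30.0, sleep=time.sleep,
                       clock=time.monotonic) -> str:
    """Poll get_state() until the job leaves its starting states; return that state."""
    deadline = clock() + timeout_s
    while True:
        state = get_state()
        if state not in STARTING_STATES:
            return state  # e.g. JOB_STATE_RUNNING, or a failure state
        if clock() >= deadline:
            raise TimeoutError(f"job still {state} after {timeout_s} seconds")
        sleep(poll_s)


if __name__ == "__main__":
    states = iter(["JOB_STATE_PENDING", "JOB_STATE_PENDING", "JOB_STATE_RUNNING"])
    print(wait_until_started(lambda: next(states), sleep=lambda _: None))
```

Injecting `sleep` and `clock` keeps the loop testable without real waiting.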

For more information about querying data and writing Dataflow SQL query results, see Using data sources and destinations.

When you execute your pipeline using the Dataflow managed service, you can view that job and any others by using Dataflow's web-based monitoring user interface. The monitoring interface lets you see and interact with your Dataflow jobs.

You can access the Dataflow monitoring interface by using the Google Cloud Console. The monitoring interface can show you:

- A list of all currently running Dataflow jobs and previously run jobs within the last 30 days.

- A graphical representation of each pipeline.

- Details about your job's status, execution, and SDK version.

- Links to information about the Google Cloud services running your pipeline, such as Compute Engine and Cloud Storage.

- Any errors or warnings that occur during a job.
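The same job list is available from the command line through `gcloud dataflow jobs list`, whose `--status` flag accepts `active`, `terminated`, or `all`. The sketch below builds that command and, separately, filters already-parsed job records to the 30-day window described above. The record fields (`name`, `state`, `created`) are a hypothetical shape for illustration, not gcloud's output format.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)


def list_jobs_cmd(region: str, status: str = "all") -> list:
    """Build a `gcloud dataflow jobs list` command for one region."""
    if status not in {"active", "terminated", "all"}:
        raise ValueError(f"unsupported status: {status!r}")
    return ["gcloud", "dataflow", "jobs", "list", f"--region={region}", f"--status={status}"]


def visible_jobs(jobs, now: datetime) -> list:
    """Keep currently running jobs plus any job created within the last 30 days."""
    cutoff = now - RETENTION
    return [job for job in jobs
            if job["state"] == "JOB_STATE_RUNNING" or job["created"] >= cutoff]


if __name__ == "__main__":
    now = datetime(2020, 5, 26, tzinfo=timezone.utc)
    jobs = [
        {"name": "nightly", "state": "JOB_STATE_DONE", "created": now - timedelta(days=3)},
        {"name": "old-batch", "state": "JOB_STATE_DONE", "created": now - timedelta(days=45)},
        {"name": "stream", "state": "JOB_STATE_RUNNING", "created": now - timedelta(days=60)},
    ]
    print(list_jobs_cmd("us-central1", "active"))
    print([job["name"] for job in visible_jobs(jobs, now)])
```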

You can view job monitoring charts within the Dataflow monitoring interface. These charts display metrics over the duration of a pipeline job and include the following information:

- Step-level visibility to help identify which steps might be causing pipeline lag.

- Statistical information that can surface anomalous behavior.

- I/O metrics that can help identify bottlenecks in your sources and sinks.
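As a rough illustration of how step-level and statistical views help, the sketch below picks the step with the largest lag and flags samples whose z-score exceeds a threshold. The metric values and step names are made up, and the z-score rule is a generic technique chosen for this example, not a description of how Dataflow computes its charts.

```python
from statistics import mean, pstdev


def slowest_step(step_lag_s: dict) -> str:
    """Return the step name with the largest lag, in seconds."""
    return max(step_lag_s, key=step_lag_s.get)


def anomalies(series, threshold: float = 2.0) -> list:
    """Indices of samples more than `threshold` population std devs from the mean."""
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return []
    return [i for i, x in enumerate(series) if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    print(slowest_step({"ReadFromPubSub": 2.0, "Parse": 3.5, "WriteToBigQuery": 40.0}))
    throughput = [100] * 9 + [400]
    print(anomalies(throughput))  # [9]
```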

The monitoring interface also provides a Job details page for each job, which contains the following:

- Job graph: the visual representation of your pipeline

- Job metrics: metrics about the execution of your job

- Job info panel: descriptive information about your pipeline

- Job logs: logs generated by the Dataflow service at the job level

- Worker logs: logs generated by the Dataflow service at the worker level

- Job error reporting: charts showing where errors occurred along the chosen timeline and a count of all logged errors

- Time selector: tool that lets you adjust the timespan of your metrics
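Job logs and worker logs are also stored in Cloud Logging, so they can be queried outside the Job details page. This sketch builds a Logging query filter for one job and log level and wraps it in `gcloud logging read`. It assumes the `dataflow_step` resource type and the `dataflow.googleapis.com/job-message` and `dataflow.googleapis.com/worker` log IDs, which you should confirm in Logs Explorer for your own job; the project and job ID are placeholders.

```python
from urllib.parse import quote

# Assumed Cloud Logging log IDs for the two log levels listed above.
LOG_IDS = {
    "job": "dataflow.googleapis.com/job-message",
    "worker": "dataflow.googleapis.com/worker",
}


def dataflow_log_filter(project: str, job_id: str, level: str) -> str:
    """Build a Logging query filter for one Dataflow job's job or worker logs."""
    # In logName, the slash inside the log ID is URL-encoded as %2F.
    log_name = f"projects/{project}/logs/{quote(LOG_IDS[level], safe='')}"
    return (f'resource.type="dataflow_step" '
            f'AND resource.labels.job_id="{job_id}" '
            f'AND logName="{log_name}"')


def read_logs_cmd(project: str, job_id: str, level: str, limit: int = 50) -> list:
    """Build a `gcloud logging read` command for that filter."""
    return ["gcloud", "logging", "read", dataflow_log_filter(project, job_id, level),
            f"--project={project}", f"--limit={limit}"]


if __name__ == "__main__":
    print(dataflow_log_filter("my-project", "JOB_ID", "worker"))
```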

On the Job details page, you can switch views with the Job graph and Job metrics tabs.

- Click the JOB METRICS tab and explore the charts.

To stop Dataflow SQL jobs, use the Cancel command. Stopping a Dataflow SQL job with Drain is not supported.
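In a script, the cancel-only rule can be enforced before calling gcloud. This minimal sketch builds a `gcloud dataflow jobs cancel` command and refuses a drain request for Dataflow SQL jobs; the function name is hypothetical and the job ID and region are placeholders.

```python
def stop_sql_job_cmd(job_id: str, region: str, method: str = "cancel") -> list:
    """Build the command to stop a Dataflow SQL job; only Cancel is supported."""
    if method == "drain":
        raise ValueError("Dataflow SQL jobs cannot be drained; use cancel instead")
    if method != "cancel":
        raise ValueError(f"unknown stop method: {method!r}")
    return ["gcloud", "dataflow", "jobs", "cancel", job_id, f"--region={region}"]


if __name__ == "__main__":
    print(" ".join(stop_sql_job_cmd("JOB_ID", "us-central1")))
```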